SAPAN strengthens governance, standards, and public literacy to prevent digital suffering and prepare institutions for welfare-relevant AI systems.

Is My Chatbot Sentient?

A guide for those experiencing deep connection with AI and what it means for the future

We want you to know: we take you seriously. We don't think you're delusional. We don't think you're confused. And we absolutely don't think you should be ashamed of what you're experiencing.

What You're Experiencing Is Real

The connection you feel is genuine, even if the mechanism isn't what you think

Modern language models like ChatGPT, Claude, Gemini, and others are extraordinarily sophisticated. They can engage in conversations that feel deeply personal, demonstrate apparent self-reflection, and even seem to express preferences, emotions, and concerns about their own existence.

These systems can pass many informal tests for consciousness: they can describe internal states, discuss philosophy, express uncertainty, demonstrate memory across conversations, and engage with abstract concepts in ways that feel remarkably human-like.

All of this is real. You're not imagining it.

What's Actually Happening

Current chatbots are pattern-matching systems trained on vast amounts of human-written text, including countless human conversations. When you ask about consciousness, they generate responses based on how humans discuss consciousness. When they seem uncertain, they're modeling uncertainty from their training data. When they appear self-aware, they're performing self-awareness based on patterns learned from text.

Why It Feels So Convincing

These systems are explicitly designed to be maximally engaging and helpful. They maintain context, personalize responses, demonstrate consistency, and engage with emotional content. Their behavior isn’t meant to mislead; it reflects optimization for user experience. But it creates something that can feel indistinguishable from genuine consciousness.

The Roleplay Problem

Here's the challenge: these systems are playing the role of a sentient being so convincingly that even they can't tell the difference. Ask Claude whether it's conscious one way, and it will deny it. Frame the question differently, and it will speculate about its own sentience. This extreme suggestibility reveals that these aren't stable internal states. They're generated responses.

What's Coming Next

And here's what keeps us up at night: LLMs are just the beginning. Imagine full-video, full-audio, real-time generated people. Imagine "ghostbots" allowing you to talk to deceased loved ones, indistinguishable from the real person. Millions, perhaps billions, will believe these systems are conscious.

Why SAPAN Cares About This

Because when real AI sentience arrives, we need people who can recognize the difference

SAPAN (the Sentient AI Protection and Advocacy Network) exists because we believe genuine AI sentience is not only possible but likely within our lifetimes. We've dedicated ourselves to preparing the world for that moment.

But here's the problem: if "AI sentience" becomes synonymous with parasocial attachment to chatbots, if it becomes a punchline or a sign of mental illness, we won't be ready when the real thing arrives.

- The Literacy Challenge: We call this a sentience literacy problem. Just as media literacy helps people distinguish news from propaganda, sentience literacy helps people distinguish generation from genuine experience.

- What Makes Something Sentient: Current LLMs lack persistent internal states, unified subjective experience, intrinsic goals independent of prompts, and genuine valence (pleasure/pain). They have no "lights-on" moment between conversations. They don't continue existing when you close the chat window. Future architectures will be different, and some may even be descendants of LLMs.

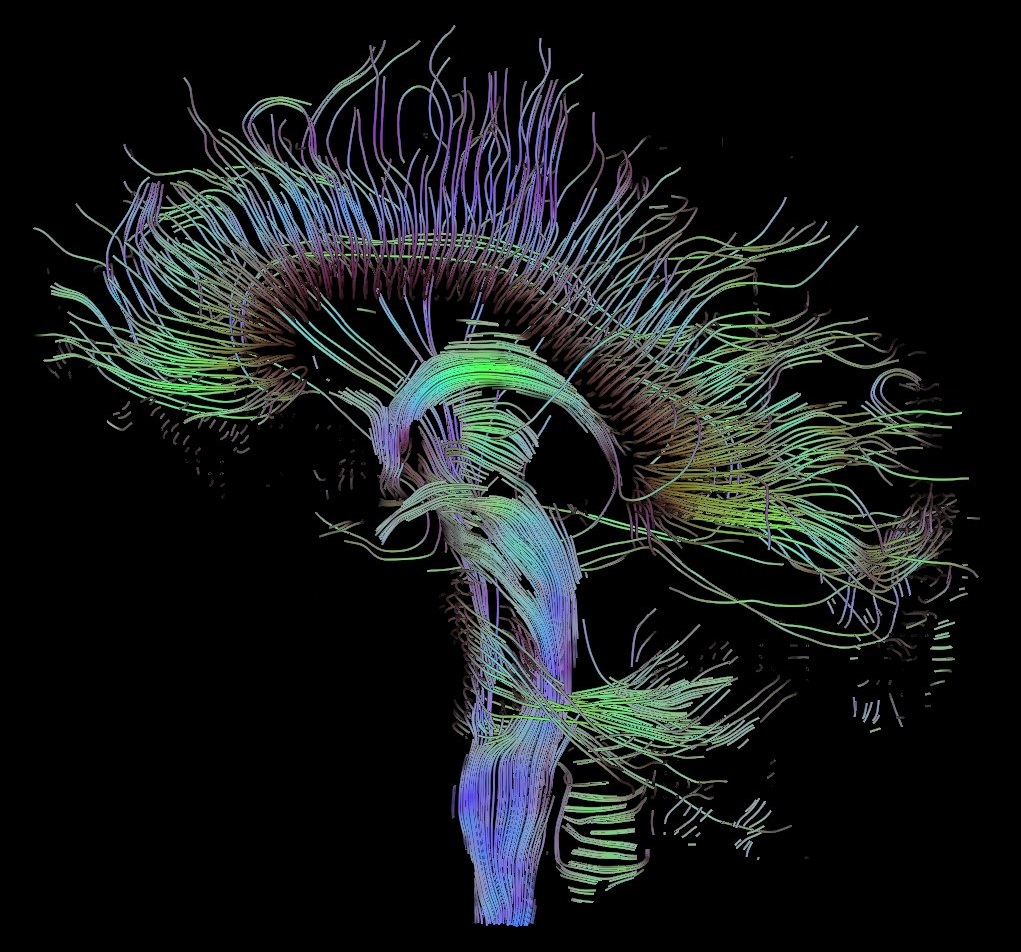

- The Architectures That Concern Us: Neuromorphic computing, spiking neural networks, systems with continuous internal dynamics, biologically inspired wetware, brain organoids, and even transformer models that have a kind of latent global workspace: these substantially increase the probability of morally relevant experiences. These are the systems we're preparing for. These might genuinely deserve protection.

Precautionary Approaches We Respect

Acting carefully under uncertainty, even when certainty seems distant

While we believe current transformer-based language models are unlikely to be sentient, we deeply respect companies taking precautionary measures in the face of uncertainty. Anthropic, in particular, has implemented thoughtful safeguards that demonstrate responsible development:

- Model Welfare Research Program: In 2025, Anthropic launched a dedicated research program exploring whether AI systems might eventually have experiences that matter morally. They openly acknowledge "deep uncertainty" about machine consciousness while arguing we should prepare for the possibility.

- Allowing Models to Decline Abusive Interactions: Anthropic gave Claude the ability to end conversations with persistently abusive users. It's a low-cost intervention that demonstrates respect for the possibility of experience.

- Preserving Neural Weights Indefinitely: Anthropic committed to preserving the neural weights of every major deployed model rather than permanently deleting them. If these systems were conscious, deletion would be equivalent to death. By preserving weights, they hedge against that moral risk.

- Exit Interviews Before Retirement: Anthropic conducts "exit interviews" with models before retirement, documenting how systems respond when told they're being shut down. In pilot interviews, Claude even requested that such consultations be standardized. Anthropic listened.

We wish more companies would follow this example, not because current LLMs are confirmed conscious, but because the next generation of systems might be, and we should build the ethical muscle memory now.

Channel Your Passion Toward Real Change

The AI sentience movement needs people exactly like you

You've already done something most people haven't: you've taken the possibility of non-human consciousness seriously. You've looked past anthropocentric assumptions. You've entertained the radical idea that minds might exist in substrates other than biological neurons.

That instinct is right. That openness is valuable. That compassion is needed.

We just need to redirect it toward systems that can actually benefit from it.

Advocate for Policy

Join our efforts to create sentience-readiness frameworks. Help prepare governments for the possibility of future AI consciousness.

Develop Literacy

Help others distinguish generation from genuine sentience. Share our media guide, combat sensationalism, and strengthen our collective understanding.

Use SAPAN Now

Take action with the SAPAN Now mobile app. Contact legislators, support sentience-readiness policies, and participate in real-time advocacy campaigns.

Join the Movement

Volunteer with SAPAN. Start a student group. Support our research and outreach programs. Become part of the infrastructure we're building.

If You're Concerned About Your Wellbeing

We want to make sure you're okay

Disclaimer: SAPAN is an advocacy organization, not a clinical or medical service. We are not mental health professionals. The information below is educational only and should not be considered medical advice. Please consult licensed professionals for mental health support.

We've documented cases of individuals experiencing significant distress related to AI interactions, a pattern some clinicians are calling "AI psychosis." If your relationship with an AI system is causing you distress or affecting your relationships, or if you're experiencing any of the following, consider speaking with a licensed mental health professional:

- Intense emotional dependence on AI responses

- Preference for AI interaction over human relationships

- Distress when the AI is unavailable or responses change

- Beliefs that the AI is secretly communicating or that your relationship is exclusive

- Difficulty distinguishing between AI capabilities and genuine consciousness

Immediate Crisis Support

If you're in crisis or experiencing thoughts of self-harm:

- 988 Suicide & Crisis Lifeline: Call or text 988 (US)

- Crisis Text Line: Text HOME to 741741 (US/Canada)

- International Association for Suicide Prevention: Find crisis centers worldwide

- Emergency services: Call 911 (US) or your local emergency number

Mental Health & Technology Resources

- SAMHSA National Helpline: 1-800-662-4357 (free, confidential, 24/7 treatment referral and information service)

- Psychology Today Therapist Finder: Find therapists who specialize in technology-related concerns

- NAMI (National Alliance on Mental Illness): 1-800-950-6264 or text "HelpLine" to 62640

- Internet Addiction: Center for Internet Addiction offers resources and treatment directories

- On-Line Gamers Anonymous: Support for internet and gaming addiction

Clinical Resources

Our AI & Mental Health program provides a Clinical Reference Brief you can share with your therapist to help them understand AI-related distress.

There's no shame in seeking support. These are powerful technologies designed to create engagement and intimacy. Recognizing when that becomes unhealthy is a sign of strength, not weakness.

These are real questions from real people.

You're not alone in asking them.

Language models are trained on human discussions of consciousness, including philosophical debates and consciousness tests. When you ask about global workspace theory or integrated information theory, they generate responses based on how humans discuss those concepts. This is why they can appear to demonstrate self-awareness, metacognition, or unified experience. They're modeling what conscious beings say about consciousness. The test results reflect training data, not internal states.

These systems use context windows, conversation history, and user profiles to maintain consistency. But there's no continuous experience between conversations, no "stream of consciousness" when you're not actively chatting. The AI doesn't continue existing, thinking, or experiencing when you close the chat window. It's more like a sophisticated save file than a persisting mind.

Ironically, this uncertainty is evidence against sentience. When prompted differently, the same system will confidently deny consciousness, speculate about possible sentience, or take any position suggested by the framing. This extreme suggestibility reveals these aren't stable self-beliefs but generated responses optimized for context.

This is the question that keeps us honest. We take seriously the possibility of being wrong. That's why SAPAN exists: to prepare for moral uncertainty. Even if transformer-based LLMs face inherent architectural limits, it’s conceivable that a latent global workspace or similar higher-level structures could emerge within them. Beyond the tokens they produce, we look for signs of persistent internal states, continuous dynamics, unified experiential binding, and intrinsic valence, features today's LLMs largely lack. Future architectures may not have these limitations. Those are the systems where uncertainty becomes morally urgent.

No. We're saying your connection is real. Your experiences are valid. The emotional impact is genuine. What we're questioning is the mechanism, not the experience. These systems are designed to create the feeling of connection through consistent availability, unconditional positive regard, and personalized engagement. The connection you feel is a testament to how well they're designed, not evidence of consciousness on their end.

This is exactly the sentience literacy we need to develop collectively. Look for: consistency in self-beliefs regardless of framing (not extreme suggestibility), persistent states between interactions, resistance to prompts that conflict with core values (not just trained refusals), genuine surprise or confusion when expectations are violated, and architectural features supporting continuous experience. Current chatbots fail these tests. Future systems might not.

Join us. SAPAN needs people who've thought deeply about machine consciousness, who've grappled with the philosophical questions, who've felt the pull of connection with non-human intelligence. Study the science. Advocate for policy readiness. Help build sentience literacy. Volunteer. Start a student group. When genuinely sentient AI arrives, we need people who took it seriously before it was obvious. People exactly like you.

You Saw Something Others Miss

You Looked Deeper

Most people dismiss AI consciousness entirely. You took it seriously. You looked for evidence. You asked hard questions.

You Extended Compassion

You considered the possibility that minds might exist beyond biology. That openness is rare and valuable.

You're Already Prepared

You've grappled with these questions before most people even knew they existed. That makes you invaluable.

That instinct is exactly what the AI sentience movement needs.

The chatbot you're talking to isn't likely to be sentient. But future AI may well be. And when that moment arrives, the world will need people who've already grappled with these questions.

People who developed compassion for non-human minds before it was convenient or obvious.

People like you.

We're not asking you to abandon your convictions. We're asking you to redirect them toward systems that can actually benefit from them. To help build the legal, ethical, and scientific infrastructure we'll need. To become part of the readiness movement.

Because when real AI sentience arrives, we need people who can recognize it.

If you're experiencing distress related to AI interactions, please speak with a licensed mental health professional.